-

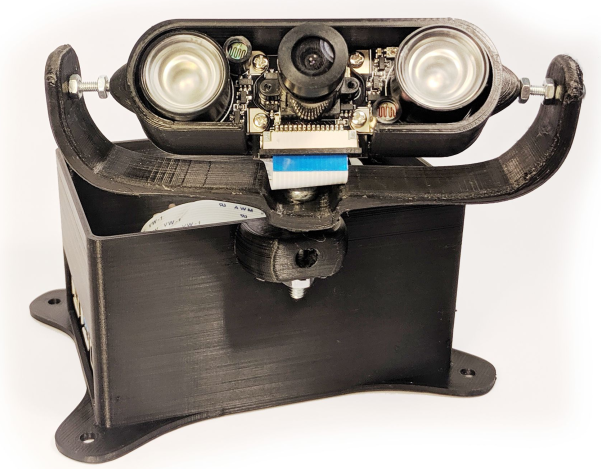

Our sensor research focuses more on the data flow, network design and intelligent interpretation of real-time sensor data than on the design of basic individual sensors. However, it is now possible to embed AI in the sensor itself, which presents opportunities we are exploring. Our first example is deepdish, which uses object detection and tracking to count people moving across virtual lines in a scene. In addition to the complexity of the measurement, this experimental sensor raises issues of computational performance and timeliness of communication, as well as power consumption, which is typically constrained in ‘edge’ devices. We are also studying the behaviour of collections of sensors (and actuators) that act in combination to form a sensor node, transmitting more intelligent ‘events’ rather than basic sensor readings. One particular prototype is the Cambridge Coffee Pot.
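The idea of counting movement across a virtual line can be illustrated with a little geometry. The sketch below is not the deepdish implementation; it assumes a tracker has already produced, for each tracked person, a list of successive (x, y) positions, and all names (`LineCounter`, `count_crossings`) are hypothetical. A crossing is counted when the step between two successive positions intersects the line segment, and the direction is taken from the side of the line on which the step ends.

```python
from dataclasses import dataclass

Point = tuple[float, float]


def side(a: Point, b: Point, p: Point) -> float:
    """Signed area test: > 0 if p lies to the left of the directed line a->b,
    < 0 if it lies to the right (in standard mathematical axes)."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def segments_cross(a: Point, b: Point, p: Point, q: Point) -> bool:
    """True if the step p->q strictly crosses the segment a->b."""
    return (side(a, b, p) * side(a, b, q) < 0
            and side(p, q, a) * side(p, q, b) < 0)


@dataclass
class LineCounter:
    """Counts crossings of one virtual line, split by direction."""
    a: Point
    b: Point
    forward: int = 0   # steps ending on the left of a->b
    backward: int = 0  # steps ending on the right of a->b

    def update(self, prev: Point, curr: Point) -> None:
        if not segments_cross(self.a, self.b, prev, curr):
            return
        if side(self.a, self.b, curr) > 0:
            self.forward += 1
        else:
            self.backward += 1


def count_crossings(tracks: dict[int, list[Point]], a: Point, b: Point) -> LineCounter:
    """tracks maps a (hypothetical) track id to that object's successive positions."""
    counter = LineCounter(a, b)
    for positions in tracks.values():
        for prev, curr in zip(positions, positions[1:]):
            counter.update(prev, curr)
    return counter
```

Which direction is called "forward" is purely a convention here; in image coordinates, where the y axis points down, left and right are mirrored relative to the comments above.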

-

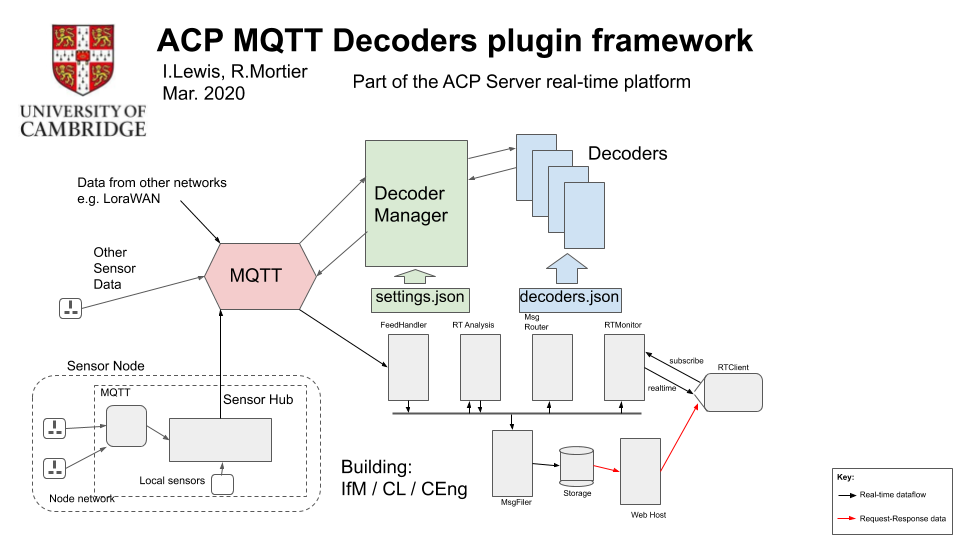

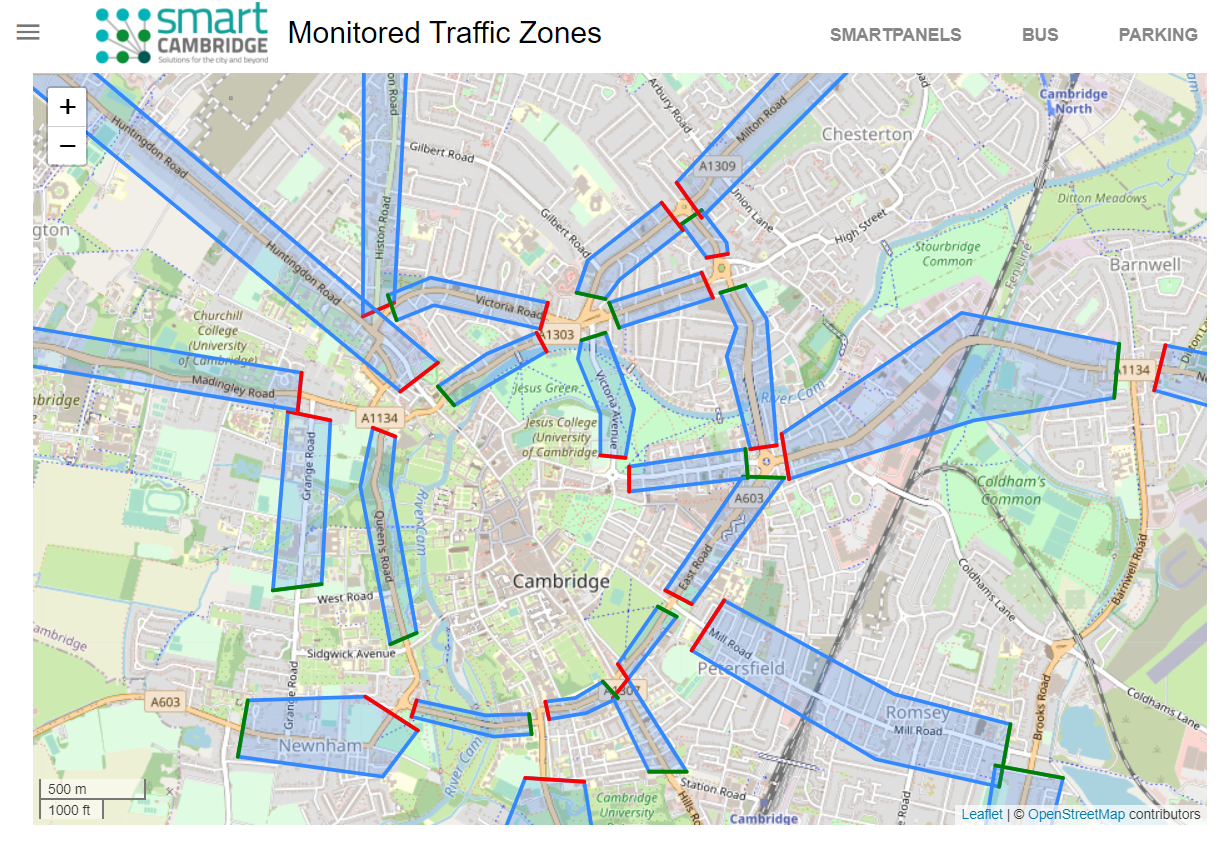

Data from sensors flows across many types of network structure and comes in many flavours, shapes and sizes. Often the original source of the data is very limited in its capability or capacity. Nevertheless, we want the data to be associated with a unique identifier representing the source, a sensible timestamp representing the time the reading occurred, and the location of the sensor at the time the reading was taken. The reading itself must then be transported through our platform and interpreted for visualisation or analysis. Our research effort includes looking at the underlying networks, such as LoRaWAN, Zigbee, WiFi and Modbus, to see whether optimisations can be applied that allow devices to communicate as intelligent nodes without necessarily sending all data to a central host. As the data enters the Adaptive City Platform we generally wrap it in a JSON message. We have architected an MQTT framework which propagates the data to a number of servers (and development workstations) for experimentation and analysis. A dynamic decoders framework is used to interpret the readings and add identifiers and timestamps where appropriate.
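As a rough illustration of wrapping and decoding, the sketch below registers decoder functions by sensor type and wraps a raw payload in a JSON message carrying an identifier, timestamp and location. It is a minimal sketch under stated assumptions, not the platform's actual decoders framework: the field names (`sensor_id`, `timestamp`, `location`, `payload_hex`, `reading`), the sensor type `temp-v1` and its two-byte payload format are all invented for the example.

```python
import json
import time
from typing import Callable, Optional

Decoder = Callable[[bytes], dict]

# Registry of decoders keyed by sensor type (hypothetical type names).
DECODERS: dict[str, Decoder] = {}


def decoder(sensor_type: str) -> Callable[[Decoder], Decoder]:
    """Decorator that registers a decoder for one sensor type."""
    def register(fn: Decoder) -> Decoder:
        DECODERS[sensor_type] = fn
        return fn
    return register


@decoder("temp-v1")
def decode_temp(payload: bytes) -> dict:
    # Assumed payload: 2-byte big-endian signed integer, hundredths of a degree C.
    return {"temperature_c": int.from_bytes(payload[:2], "big", signed=True) / 100}


def wrap(sensor_id: str, sensor_type: str, payload: bytes,
         location: Optional[dict] = None,
         received_ts: Optional[float] = None) -> str:
    """Wrap a raw payload in a JSON message, decoding it if a decoder is known."""
    decode = DECODERS.get(sensor_type)
    message = {
        "sensor_id": sensor_id,
        # Fall back to arrival time when the source supplies no timestamp.
        "timestamp": received_ts if received_ts is not None else time.time(),
        "location": location,
        "payload_hex": payload.hex(),  # keep the original bytes for later re-decoding
        "reading": decode(payload) if decode else None,
    }
    return json.dumps(message)
```

Keeping the undecoded payload alongside the decoded reading means a message from an unrecognised sensor type can still flow through the system and be interpreted later, once a suitable decoder exists.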

-

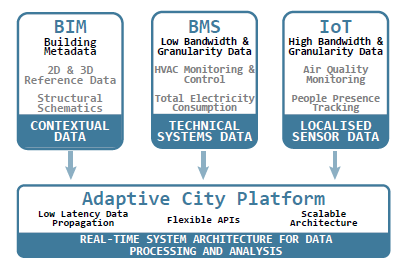

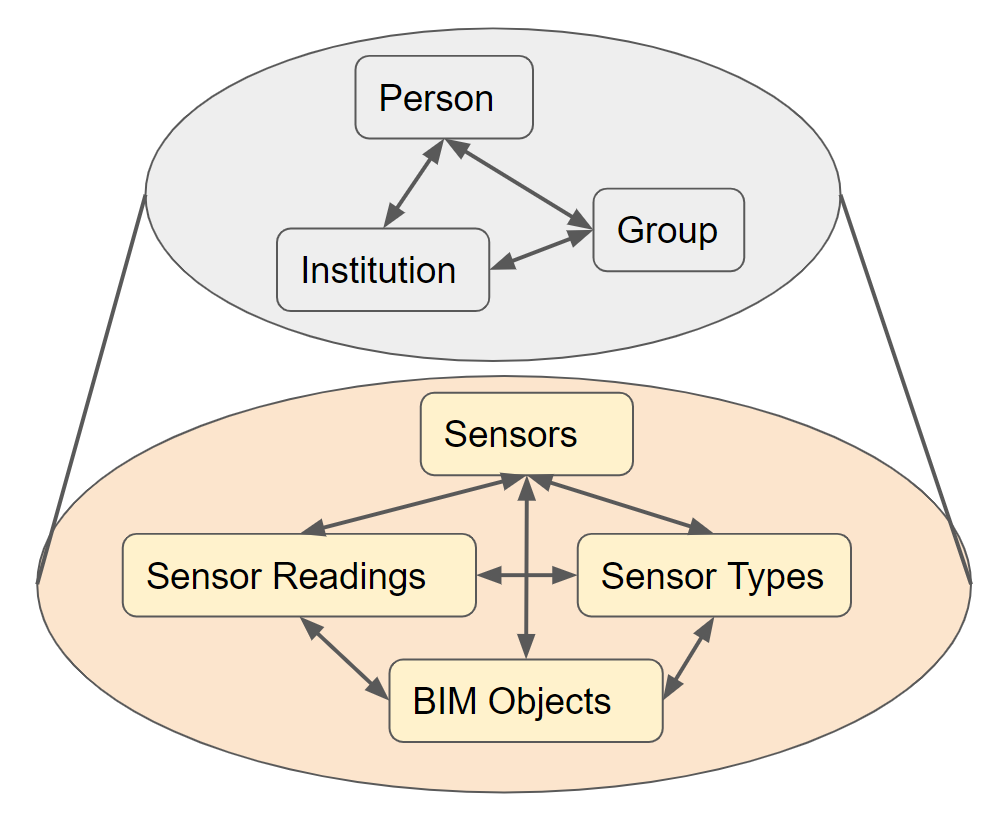

With our ACP Data Strategy we are exploring a broad range of issues in managing building and urban data. An essential aspect of our work is that it must address the issues of rapidly changing data (such as sensor readings) while also recognising that traditionally 'static' information (like building plans) does change over time. The multiple data types are intimately related and need to be considered in a more holistic way than is currently the case. As a result, we are researching a unified approach to managing the data in the following areas:

- Sensor readings

  - These are spatio-temporal, i.e. usually associated with a time and location in the building or urban region, but possibly also associated with a managed asset. We collect this data into a historical archive but also pump it through our stream processing platform so that analysis can take place in real-time.

- Sensor metadata

  - We need to manage 'reference data' for the sensors and the sensor types, e.g. to record where stationary sensors have been placed and to help interpret the data the sensors are sending. Our approach is to be flexible: it remains possible to integrate a sensor into the system with the absolute minimum of information, but this assumes the ultimate recipient of the data knows how to interpret it.

- BIM data

  - We use this term to mean the relatively static data representing 'assets' in a building or urban region, such as might be stored in IFC [ref]. Storage methods and query tools for this data traditionally ignore the concept of time, whereas the Adaptive City Platform ensures all data is timestamped and the history maintained.

- Visual representation data

  - A common requirement is to draw a visual representation of the assets and sensors managed in the system, and it is common (e.g. in IFC data) for the properties stored for a given asset to be primarily useful in enabling that asset (such as a concrete slab or pipe) to be drawn on a plan. On the Adaptive City Platform we separate the 'drawing' information into a dedicated API so that drawing common types of assets (e.g. building, floor, office, desk, microscope, sensor) can be generalized.
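One way to picture timestamped 'static' data with its history maintained is an append-only list of versions per object, queried by time. The sketch below is an illustrative assumption rather than the platform's storage design; the `History` class and its methods are hypothetical names.

```python
from bisect import bisect_right
from dataclasses import dataclass, field
from typing import Any, Optional


@dataclass
class History:
    """Append-only, time-ordered versions of one object's properties
    (for example a sensor's metadata or a building asset)."""
    _times: list[float] = field(default_factory=list)
    _versions: list[dict[str, Any]] = field(default_factory=list)

    def record(self, ts: float, properties: dict[str, Any]) -> None:
        """Store a new version that becomes valid at time ts."""
        if self._times and ts < self._times[-1]:
            raise ValueError("versions must be recorded in time order")
        self._times.append(ts)
        self._versions.append(dict(properties))

    def at(self, ts: float) -> Optional[dict[str, Any]]:
        """Return the version in force at time ts, or None if none existed yet."""
        i = bisect_right(self._times, ts)
        return self._versions[i - 1] if i else None


# Usage: a sensor is installed in one room and later moved to another.
sensor_meta = History()
sensor_meta.record(100.0, {"room": "A", "type": "temp-v1"})
sensor_meta.record(200.0, {"room": "B", "type": "temp-v1"})
room_when_read = sensor_meta.at(150.0)["room"]  # the room at the time of a reading
```

With this shape, a reading taken at a given time can be interpreted against the metadata that applied at that moment rather than against whatever is current.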

-

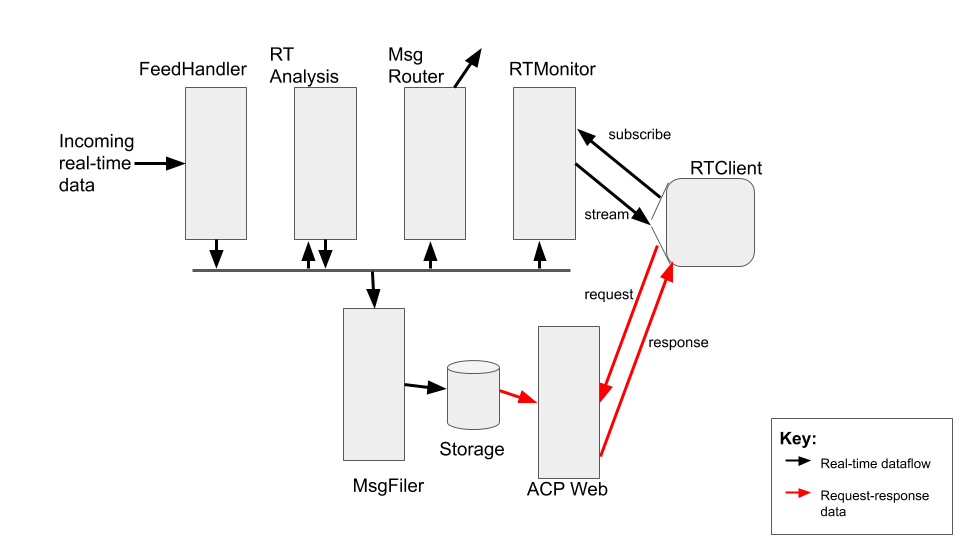

Our research has data streaming as a central theme: our objective is that real-time sensor data flows in from the networks, is distributed around our various servers and workstations, and is analysed and visualised continuously. At the core of the Adaptive City Platform is acp_server, which provides a high-performance asynchronous message-passing development system based on Eclipse Vert.x. The system is modular. Key components include FeedMaker, which receives data via HTTP; FeedMQTT, which performs a similar function for MQTT data; MsgFiler, which listens to the messages streaming through the platform and stores that data as required; RTMonitor, which subscribes to messages on behalf of downstream clients connected via websockets (particularly web pages); and MsgRouter, which can connect one acp_server system to a FeedMaker on another server.

Our research into this urban/in-building real-time platform aims to provide a general framework into which real-time analysis modules can be plugged. A key concept is that this real-time analysis may produce derived messages (events) which then propagate through the Adaptive City Platform with the same status as the original data from which the event was derived. We are particularly interested in techniques generally applicable to sensor and event data (such as managing identifiers and timestamps effectively and measuring latency) as well as investigating analysis techniques (such as pattern recognition) suitable for real-time spatio-temporal data.
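The notion of a pluggable analysis module whose derived events travel through the same channels as raw readings can be sketched with a tiny in-process publish/subscribe bus. This is only an illustration of the concept: acp_server is built on Eclipse Vert.x and is asynchronous, whereas the sketch is synchronous Python, and the names `MessageBus`, `attach_threshold_module`, the topic names and the message fields are all assumptions made for the example.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], None]


class MessageBus:
    """Minimal synchronous publish/subscribe bus."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: dict) -> None:
        # Copy the list so handlers may subscribe while a message is delivered.
        for handler in list(self._subscribers[topic]):
            handler(message)


def attach_threshold_module(bus: MessageBus, threshold: float) -> None:
    """Plug in an analysis module: it consumes raw readings and publishes a
    derived event whenever a reading exceeds the threshold."""

    def on_reading(msg: dict) -> None:
        if msg["value"] > threshold:
            bus.publish("events", {
                "sensor_id": msg["sensor_id"],
                "timestamp": msg["timestamp"],  # event inherits the reading's time
                "event": "threshold_exceeded",
                "value": msg["value"],
            })

    bus.subscribe("readings", on_reading)
```

Because the derived event is published on the same bus, a storage or websocket component could subscribe to it in exactly the way it subscribes to raw readings.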

-

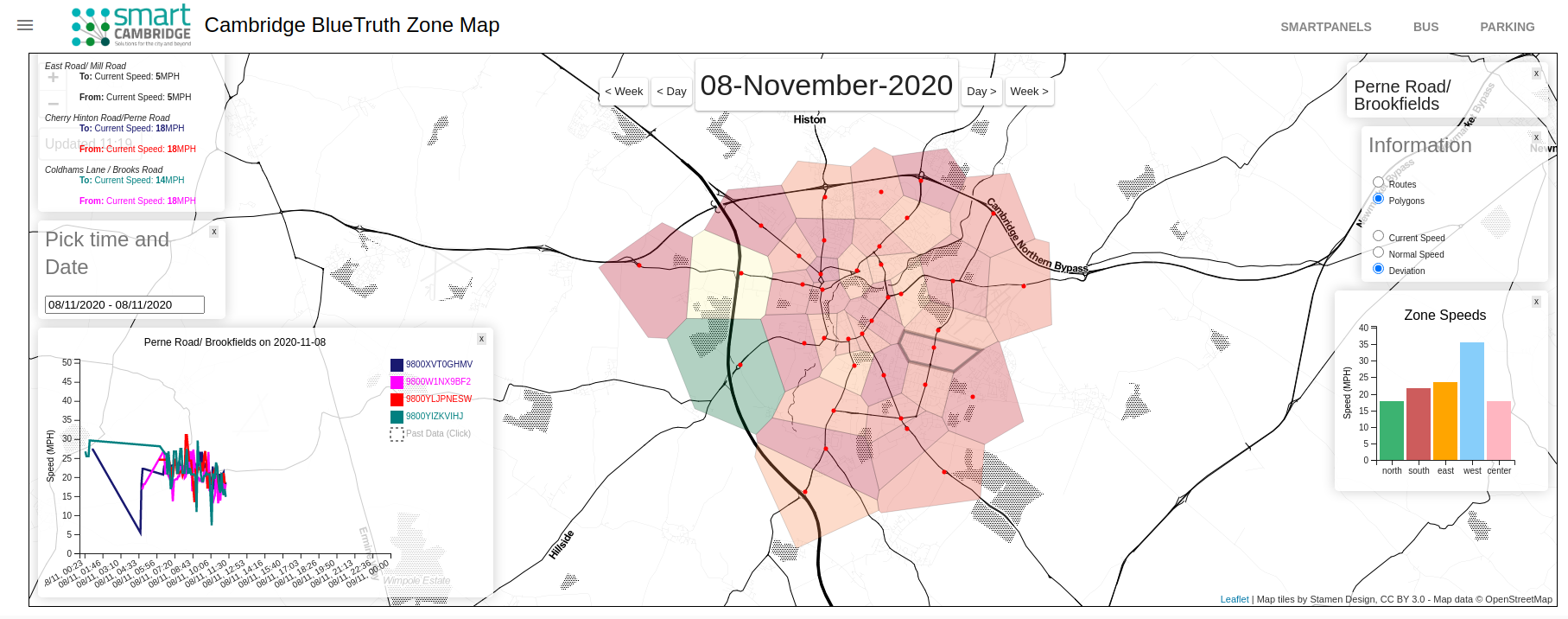

The Adaptive City Platform can serve web pages with RESTful HTTP API support and real-time websocket data subscriptions to both raw sensor data and derived events. A central research theme is how patterns in the real-time spatio-temporal data might be most effectively recognized. To support this we are investigating how the same data might be visualized, in the hope that this will help our pattern recognition work. We believe it is unhelpful to rely on entirely static images to understand how we might process data that is changing in real-time. Similarly, a 1:1 mapping of time in the sensor readings to updates of those readings on a web page, although easy to do, is too simplistic to provide much insight into the temporal dimension of any pattern hidden in the data, or indeed into the relationships between sensors that might be spatially related.

In addition, the web/visualization effort allows us to explore the requirements for navigating our diverse data types (from building assets to sensor readings) in a coherent way. The web UI also helps us assess the efficacy of our privacy research.

-

Much of the work we are doing builds up to this significant opportunity. Firstly, we wish to investigate how higher-level ‘events’ might be derived from underlying simpler sensor readings and propagated through our platform. The sensor readings involved will have an implied spatial and temporal relationship. For an urban environment, a simple example is traffic congestion. Traffic patterns are inherently spatio-temporal; they can be learned, and with a dense deployment of real-time sensors an abnormal situation can quickly be recognized. If the abnormal pattern is similar to ones seen in the past, a likely outcome can perhaps be predicted (maybe heavy traffic in Queens at 7am means a nightmare in uptown Manhattan at 8:30). Action can then be taken on the basis of the prediction, so that the otherwise likely congestion is mitigated. In-building examples are less impactful (perhaps an early rise in meeting room occupancy on a humid day suggests the room is likely to become uncomfortable later), but the ideas of intelligent real-time sensor data analysis and autonomous operation are similar.
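To make "learning a pattern and recognising an abnormal situation" concrete, the sketch below uses about the simplest possible stand-in: a per-sensor, per-hour-of-day baseline of mean and standard deviation, with a reading flagged when it deviates by more than k standard deviations. This is an assumption chosen for illustration, not the pattern recognition technique under research, and the class name, sensor identifier and numbers are hypothetical.

```python
from collections import defaultdict
from statistics import mean, pstdev


class HourlyBaseline:
    """Learns typical values per (sensor, hour of day) and flags outliers."""

    def __init__(self) -> None:
        self._samples: dict[tuple[str, int], list[float]] = defaultdict(list)

    def learn(self, sensor_id: str, hour: int, value: float) -> None:
        """Add one historical observation to the baseline."""
        self._samples[(sensor_id, hour)].append(value)

    def is_abnormal(self, sensor_id: str, hour: int, value: float,
                    k: float = 3.0) -> bool:
        """True if value is more than k standard deviations from the learned mean."""
        samples = self._samples.get((sensor_id, hour), [])
        if len(samples) < 2:
            return False  # not enough history to judge
        mu = mean(samples)
        sigma = pstdev(samples)
        if sigma == 0:
            return value != mu
        return abs(value - mu) > k * sigma


# Usage: vehicles per minute seen by one (hypothetical) sensor at 7am on past days.
baseline = HourlyBaseline()
for past_count in [100, 110, 90, 105, 95]:
    baseline.learn("junction-1", 7, past_count)
flagged = baseline.is_abnormal("junction-1", 7, 160)
```

A real system would also need the spatial dimension, comparing neighbouring sensors, which this single-sensor baseline ignores.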

-

For both building and urban information, the traditional security model has been to limit access to the data to a small group of ‘super-users’, with the ability to update the data limited to an even smaller subset of these. The emerging IoT implies a great increase in the density of deployed sensors. The sensors will be more intelligent (e.g. face recognition vs. recording an environmental temperature) and, for the autonomous building or city of the future, the sensor data will necessarily be real-time. For at least these reasons, the privacy risk to the individual citizen will be greater in the future than it is today.

The uncomfortable facts are that sensors will densify and become more intelligent, the data will become more immediate, and the decisions or actions based on the data will be more impactful on the life of a typical citizen. This motivates our research into how the privacy aspects of the building or city of the future can be managed.